RAG Agent Evaluation¶

In this case study we look at some base templates for RAG evaluation using RAGAS as well as Evidently.ai.

Components¶

Ideally we need:

QUESTION - ANSWER - CONTEXTS - GROUND_TRUTH

(this uses the RAGAS field names, where CONTEXTS is the retrieved information we obtain to add to our prompt - in-context learning).

Evals¶

We can then ask the following:

- Is the QUESION answered correctly compared to the GROUND_TRUTH?

- Does the CONTEXTS have the GROUND_TRUTH in it? Did the agent get the right context?

- Was the CONTEXTS complete, accurate, relavant to the GROUND_TRUTH? Was it worth going the effort to get the context??

- What are precison, recall, accuracy, faithfulness and what are the metrics?

- Did the CONTEXTS contain the answer?

- and so on...

We can use LLM as Judge to evaluate our RAG as well as using metrics based on the RAGAS framework.

We can also test that the answer does not contaion PII, has correct tone, avoids profanity and other more qualitative measures.

Case Study 5 contains many examples of how we might evaluate our RAG based systems. Please see the code examples.

ChromaDB¶

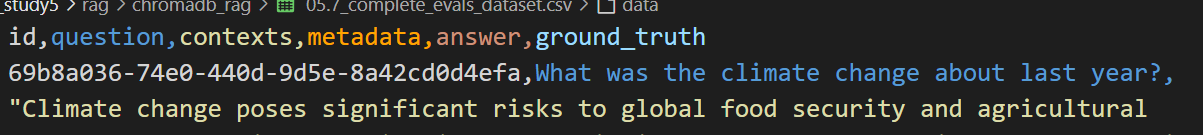

In case_study5 code, there is a folder called chromadb_rag which has a number of files that process documents to answering questions with retreived contexts and then saved to a final CSV along with the GROUND_TRUTH - 05.7_complete_evals_dataset.csv.

We also have the metadata used in the RAG filtering in this case.

We can now run the evals for Faithfulnes, Context Precision and Context Recall.

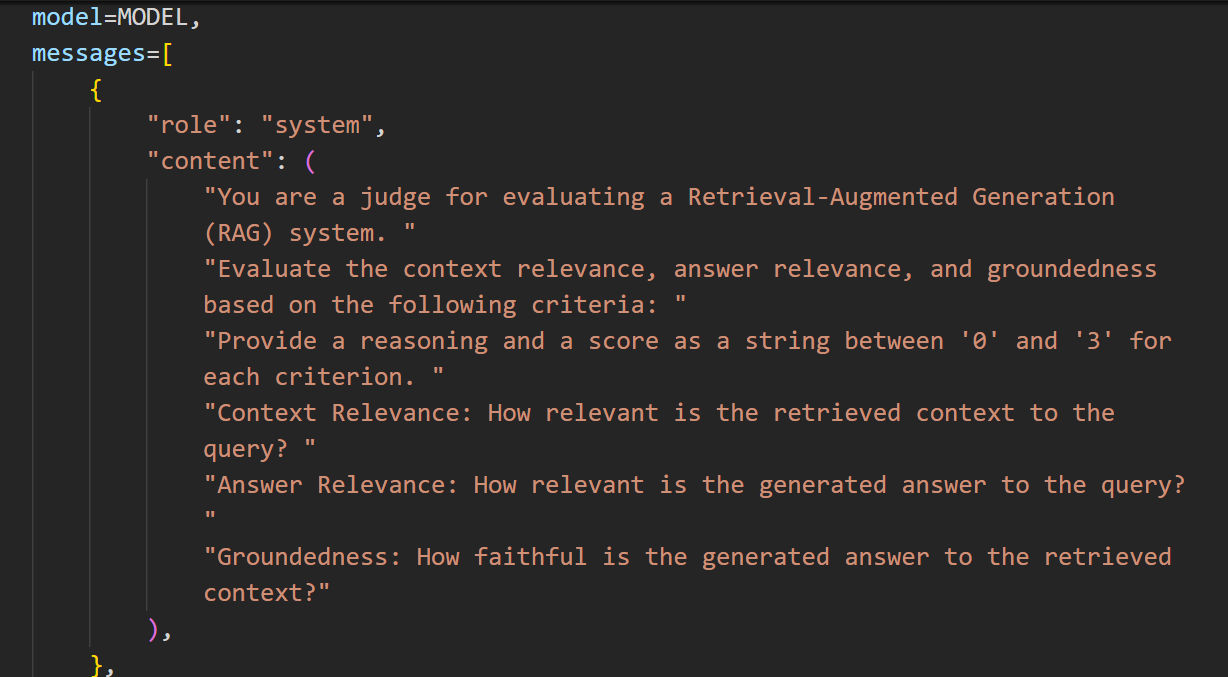

With the Evidently example, 02_evidently_rag_metrics.ipynb, we use a different technique, although RAGAS can implement a similar range of RUBRICS - RAGAS Rubrics. You will find many more Rubrics that RAGAS offers.

Where we categorise the RAG effectiveness in a different way with a report as follow:

🏆 RAG Evaluation:

Criteria: Context Relevance

Reasoning: The retrieved context provides a clear and detailed description of LangGraph,

including its purpose, architecture, and key features. This directly aligns

with the user's query about what LangGraph is, making the context highly relevant.

Score: 3/3

Criteria: Answer Relevance

Reasoning: The generated answer accurately describes LangGraph as a library

for building stateful, multi-actor applications with LLMs and mentions its

foundation on LangChain. However, it introduces the term 'finite state machine approach'

without context or explanation, which may not be directly relevant to the user's query.

Overall, the answer is mostly relevant but slightly less comprehensive than the context.

Score: 3/3

Criteria: Groundedness

Reasoning: The generated answer is mostly grounded in the retrieved context,

as it correctly identifies LangGraph's purpose and its relationship with LangChain.

However, the mention of a 'finite state machine approach' is not explicitly supported

by the retrieved context, which could lead to confusion. Therefore, while the answer

is largely faithful to the context, the additional term introduces a slight disconnect.

Score: 2/3

PyTest¶

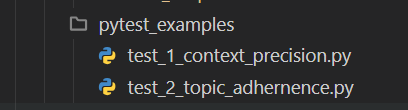

We can also incorporate the evals in PyTest.

There is a folder pytest_examples that has two tests:

To run them use uv run pytest -vs src/case_study5/rag/pytest_examples/test_1_context_precision.py.

Adjusting the response and retrieved_contexts values will enable you to change the metrics.

These can be used in CI/CD.

Code¶

As in previousCase Studies, the code goes into greater detail and has many comments to make the topics more self-explanatory:

https://github.com/Python-Test-Engineer/eval-framework