Libraries¶

There are a number of ways we can evaluate the performance of the app.

These use traditional Natural Language Processing (NLP) and Machine Learning (ML) techniques.

There are a number of frameworks for evaluations.

Evidently.ai and RAGAS are two that I like to work with.

These are used in the evaluation framework.

Evidently.ai¶

https://github.com/evidentlyai/community-examples

RAGAS¶

https://docs.ragas.io/en/stable/

Imagine you're asking your mom a question about dinosaurs, and she needs to look through some books to give you an answer!

Context Precision is like asking: "Did mom pick the RIGHT books?"

- If you ask "What did T-Rex eat?" and mom grabs a dinosaur book, that's good!
- But if she grabs a cookbook instead, that's not very precise - wrong book for your question!

Context Recall is like asking: "Did mom find ALL the important books she needed?"

- Maybe there are 3 different dinosaur books that could help answer your question
- If mom only found 1 book but missed the other 2 important ones, that's not good recall
- Good recall means she found all the helpful books!

Faithfulness is like asking: "Did mom tell you exactly what the books said, or did she make stuff up?"

- If the book says "T-Rex ate meat" and mom tells you "T-Rex ate meat," that's faithful!
- But if the book says "T-Rex ate meat" and mom tells you "T-Rex ate ice cream," that's not faithful - she's making things up that weren't in the book!

So when we use computers to answer questions (like ChatGPT), we want them to:

- Pick the right information (precision)
- Find all the important information (recall)
- Only tell us what's actually in their sources, not make things up (faithfulness)

It's like having a really good helper who finds the right books, doesn't miss any important ones, and tells you exactly what they say!

RAG Metrics¶

Context Precision¶

Context Precision measures the proportion of relevant chunks in the retrieved_contexts. It is calculated as the mean of the precision@k for each chunk in the context. Precision@k is the ratio of the number of relevant chunks in the top k results to the total number of chunks in the top k results.
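As a rough illustration of the calculation, here is a minimal sketch in plain Python. It does not call RAGAS: the function names `precision_at_k` and `context_precision` and the 0/1 relevance flags are assumptions for illustration (in practice a human or an LLM judge decides whether each chunk is relevant). It averages precision@k over the ranks that hold a relevant chunk, which is how I understand the RAGAS formulation.

```python
def precision_at_k(relevance, k):
    """Share of relevant chunks among the top k retrieved chunks."""
    top_k = relevance[:k]
    return sum(top_k) / len(top_k)


def context_precision(relevance):
    """Average precision@k over the ranks that hold a relevant chunk.

    `relevance` is a list of 0/1 flags, one per retrieved chunk, in rank order.
    """
    relevant_ranks = [k for k, flag in enumerate(relevance, start=1) if flag]
    if not relevant_ranks:
        return 0.0  # nothing relevant was retrieved
    total = sum(precision_at_k(relevance, k) for k in relevant_ranks)
    return total / len(relevant_ranks)


# Hypothetical retrieval: chunks 1 and 3 are relevant, chunk 2 is not.
score = context_precision([1, 0, 1])
print(round(score, 3))  # 0.833
```

With the irrelevant chunk ranked last, `[1, 1, 0]`, the same function returns 1.0, so the score rewards putting relevant chunks first.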

Context Recall¶

Context Recall measures how many of the relevant documents (or pieces of information) were successfully retrieved. Higher recall means fewer relevant documents were left out. In short, recall is about not missing anything important, so calculating context recall always requires a reference to compare against.
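A minimal sketch of the idea, assuming we already know which items are relevant (the reference) and can match them to retrieved items by ID. The function name `context_recall` and the book IDs are hypothetical, and exact ID matching is a simplification: as far as I understand, RAGAS instead uses an LLM to check whether claims in a reference answer are supported by the retrieved context.

```python
def context_recall(reference_items, retrieved_items):
    """Fraction of the reference items that appear among the retrieved items."""
    reference = set(reference_items)
    if not reference:
        raise ValueError("context recall needs a non-empty reference")
    found = reference & set(retrieved_items)
    return len(found) / len(reference)


# Three books could answer the question, but only one of them was retrieved.
reference_books = ["dino_book_1", "dino_book_2", "dino_book_3"]
retrieved_books = ["dino_book_1", "cookbook"]
print(round(context_recall(reference_books, retrieved_books), 3))  # 0.333
```

Note that the extra `cookbook` does not lower recall; irrelevant retrievals are penalised by precision instead.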

Faithfulness¶

The Faithfulness metric measures the factual consistency of the generated answer against the given context. It is calculated from the answer and the retrieved context. The score falls in the range 0 to 1, and higher is better.

The generated answer is regarded as faithful if all the claims made in the answer can be inferred from the given context. To calculate this, a set of claims from the generated answer is first identified. Then each of these claims is cross-checked with the given context to determine if it can be inferred from the context.
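The final scoring step can be sketched as follows, assuming the claims have already been extracted and checked. The function name `faithfulness` and the hand-labelled verdicts are assumptions for illustration; in a real pipeline an LLM typically performs both the claim extraction and the check against the context.

```python
def faithfulness(claim_verdicts):
    """Share of answer claims that can be inferred from the context.

    `claim_verdicts` maps each claim extracted from the answer to True
    (supported by the context) or False (not supported).
    """
    if not claim_verdicts:
        raise ValueError("no claims were extracted from the answer")
    supported = sum(1 for ok in claim_verdicts.values() if ok)
    return supported / len(claim_verdicts)


# Hand-labelled verdicts against a context that says "T-Rex ate meat".
verdicts = {
    "T-Rex ate meat": True,
    "T-Rex ate ice cream": False,
}
print(faithfulness(verdicts))  # 0.5
```

An answer is fully faithful in this sketch only when every claim is marked as supported, which gives a score of 1.0.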

Other evaluations¶

There are many other libraries for evaluating the performance of our app, such as Giskard and Hugging Face.

Domain Expert Evaluation¶

Ultimately, the real test is the judgment of the domain expert and the user, not the metrics.

A human in the loop will always be needed, even if only to provide the sample data for training an LLM judge.

However, curating a set of 30-50 question-and-answer pairs from a domain expert does not take long in the scheme of things and provides a robust evaluation.

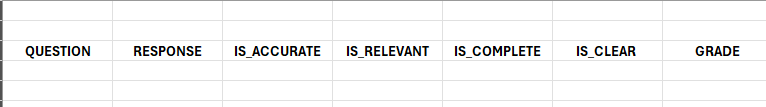

Here, we have a set of questions and answers and we want to evaluate the performance of the app.

We can create a set of 'ground truths': questions and their correct answers, set up by domain experts.
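One way to hold such a set is sketched below. The `GroundTruth` class and its field names are hypothetical, chosen only for illustration, and the single example entry reuses the T-Rex question from earlier.

```python
from dataclasses import dataclass, field


@dataclass
class GroundTruth:
    """One expert-curated question with its reference answer."""
    question: str
    reference_answer: str
    # Filled in later by the app and reviewed by the domain expert.
    generated_answer: str = ""
    retrieved_contexts: list = field(default_factory=list)


ground_truths = [
    GroundTruth(
        question="What did T-Rex eat?",
        reference_answer="T-Rex ate meat.",
    ),
]

for item in ground_truths:
    print(item.question, "->", item.reference_answer)
```

Keeping the generated answer and retrieved contexts next to the reference makes it easy to show all of them side by side to a reviewer.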

We can then have our app generate answers and context for these questions, so that a domain expert can assess not just the accuracy but also whether the returned context was relevant: